What DataUsa is doing could be – I guess – the next step in the evolution of Open Government Data websites. It’s the step from offering file downloads to presenting data (and not files) interactively. And it’s a kind of presentation many official statistical websites would surely be proud of.

César A. Hidalgo from MIT discusses the philosophy behind this. More on that at the end of this post; first, a short look at the website.

Bringing data together

Merging data from different sources may have been the most expensive and challenging task and the conditio sine qua non for the existence of this website. And perhaps it’s more an organizational than a technical challenge.
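
To give a rough idea of the technical half of that task, here is a minimal sketch of joining two sources on a shared geography identifier. Everything in it is an assumption made for illustration: the identifiers, field names and values are invented placeholders, and this is not how DataUsa itself is built.

```python
# Hypothetical extracts from two sources, keyed by a shared geography ID.
# All IDs, field names and values are placeholders, not real statistics.
population_by_geo = {
    "geo-001": {"name": "Region A", "population": 1000},
    "geo-002": {"name": "Region B", "population": 2500},
}
median_income_by_geo = {
    "geo-001": {"median_income": 40000},
    "geo-003": {"median_income": 52000},
}


def merge_by_geo(*sources):
    """Combine several {geo_id: fields} mappings into one profile per geo_id.

    Geographies missing from a source simply lack that source's fields;
    if two sources share a field name, the later source wins.
    """
    merged = {}
    for source in sources:
        for geo_id, fields in source.items():
            merged.setdefault(geo_id, {}).update(fields)
    return merged


profiles = merge_by_geo(population_by_geo, median_income_by_geo)
```

Even in this toy case the organizational questions show up quickly: the sources have to agree on what a geography identifier means, and someone has to decide what happens when one source covers a region and another does not.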

Seven public data sources are accessible via DataUsa.

Presenting data

Adapting to what internauts normally do, the site makes a search bar its main entrance.

Thematic and geographical profiles are available, too, but only in a hidden menu.

The presentation of the data is a mix of generated text and various types of graphs.

The options above every graph let you share, embed or download it, view the data as a table, and even access an API for the data.

And finally, thematic maps provide other views and insights:

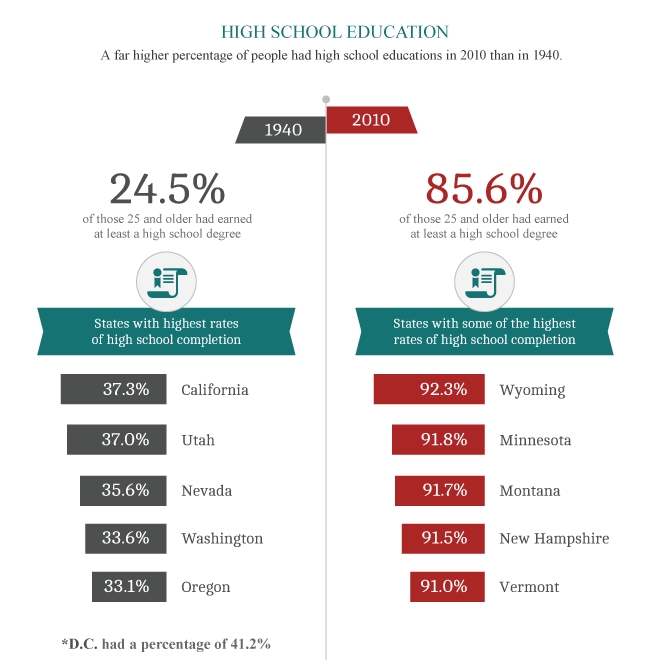

Storytelling

But the fascinating part is Stories.

Various authors write stories focussing on special topics and using the presentation techniques of the site.

Background

A glossary explains technical terms, and the About section presents the authors and their aim:

‘In 2014, Deloitte, Datawheel, and Cesar Hidalgo, Professor at the MIT Media Lab and Director of MacroConnections, came together to embark on an ambitious journey — to understand and visualize the critical issues facing the United States in areas like jobs, skills and education across industry and geography. And, to use this knowledge to inform decision making among executives, policymakers and citizens.’

And this leads to the

Philosophy behind

César A. Hidalgo, one of the website’s authors, explains why they did what they did in a blog post titled ‘What’s Wrong with Open-Data Sites–and How We Can Fix Them.’

Here’s the design philosophy in a visual nutshell:

‘Our hope is to make the data shopping experience joyful, instead of maddening, and by doing so increase the ease with which data journalists, analysts, teachers, and students, use public data. Moreover, we have made sure to make all visualizations embeddable, so people can use them to create their own stories, whether they run a personal blog or a major newspaper.’

And:

‘After all, the goal of open data should not be just to open files, but to stimulate our understanding of the systems that this data describes. To get there, however, we have to make sure we don’t forget that design is also part of what’s needed to tame the unwieldy bottoms of the deep web.’