The end of the traditional statistical yearbook – whether printed or as an ebook – is gradually approaching.

The German yearbook is the latest casualty. Its last edition was bid farewell at a press conference on 30 October 2019:

“Digitisation is shaping the statistics of the 21st century. The expansion of our digital communication is necessary if we want to remain the leading provider of statistical information about Germany. We say goodbye to the Statistical Yearbook, which has represented our work for almost seven decades. The yearbook goes, but the data remain. They are already available via our online services in greater abundance than ever before. …

One thing is clear: rigid reference books are hardly in demand today. The trend is towards up-to-date, digitally available information. Information is researched online.”

Source: Destatis press conference, 30 October 2019 (original in German).

Digi…. ?

The rationale for abolishing printed yearbooks is always the same: digit(al)ization advances, users have new needs, and they go online.

The three D’s.

What is meant by digit(al)ization?

“Digitization essentially refers to taking analog information and encoding it into zeroes and ones so that computers can store, process, and transmit such information. …

We refer to digitalization as the way in which many domains of social life are restructured around digital communication and media infrastructures.

Instead, … digital transformation … refers to the customer-driven strategic business transformation that requires cross-cutting organizational change as well as the implementation of digital technologies.

In the final analysis, therefore, we digitize information, we digitalize processes and roles that make up the operations of a business, and we digitally transform the business and its strategy. Each one is necessary but not sufficient for the next, and most importantly, digitization and digitalization are essentially about technology, but digital transformation is not. Digital transformation is about the customer.”

Source: Forbes

Digital alternatives

There is no doubt that the Internet has become the primary source of information: the first step no longer leads to the bookshelf but, digitally, to the smartphone, tablet or PC.

Digitization has taken place: everything is available in binary form.

Digitalization has taken place too, in the form of digital channels of information and communication: statistical institutions run comprehensive websites, some with more and some with less user-friendly guidance, and they also offer many interactive databases.

Users who reach these sources still face some work: they have to find their way around and search databases before a table, a file or a simple web page appears on the (often too small) screen.

Table-based yearbooks

With the end of the German Statistical Yearbook, there is a comprehensive alternative for its content: more tables, graphs and methodological explanations can be found on the web, though they take a little more effort to find and are no longer concentrated in one place. It's like leaving a small, manageable town and having to find your way around a big city. And the content is no longer a physically tangible object guaranteed to remain accessible over a long period, nor, as a book can be, a visible showpiece and flagship for the institution's image.

Yearbooks with stories to tell

A distinctive feature of traditional yearbooks is their texts, which offer a certain kind of storytelling. This is quite demanding: it has to be more than a dull retelling of table contents, yet it must not stray into controversial or even politically colored interpretations. What makes these texts stand out is that they describe the context of each thematic area and point out remarkable developments. They help readers get a quick first orientation in the extensive data.

Here are a few examples of such storytelling yearbooks and how they – whether discontinued or not – have responded to the trend towards digit(al)ization.

Canada till 2012

The Canadian statistical yearbook, the Canada Year Book, set an early standard for yearbooks that aimed to present a country and its international position in an attractive and widely understandable form.

‘Presented in almanac style, the 2012 Canada Year Book contains more than 500 pages of tables, charts and succinct analytical articles on every major area of Statistics Canada’s expertise. The Canada Year Book is the premier reference on the social and economic life of Canada and its citizens.

This publication has been discontinued as of April 2013. The last issue of this publication was November 2012. The Canada Year Book 2006 to 2012 is available online in html and pdf formats.’

After several changes, it was discontinued in 2013. There is no digital alternative apart from – similar to the German solution – a thematically ordered overview of data, analyses and references.

Netherlands

The Dutch statistical yearbook was converted early on from a printed to a PDF edition. It was abolished under the title Yearbook, but continued in 2014 as Trends in the Netherlands.

The yearbook went and ‘Trends in the Netherlands’ came – with even more storytelling than before. It can be found on the homepage.

Switzerland

The Swiss Statistical Yearbook is one of the last yearbooks internationally that is still printed. It is a comprehensive, multimedia, thematically organized reference work: infographics, extensive texts, tables and references in two languages, plus abstracts in two other languages, make it widely accessible.

Digitalization has not passed this yearbook by either. Older editions can be consulted on the Office's website, and the text of the current yearbook is included as an introductory panorama on each of the thematic web pages.

The Panorama: an excerpt from the current printed yearbook (PDF).

Eurostat

Most existing yearbooks entered the era of digit(al)ization entirely via file lists and interactive databases or as PDF versions. Eurostat has been taking the opposite route for several years: the idea of a storytelling book that functions as a unit was implemented digitally from the very beginning, and, last but not least, with the educational aim of promoting statistical literacy. Hence the name of this edition: Statistics Explained.

For each topic, the website ultimately leads on into the all-embracing world of digital data and databases.

What else …. ?

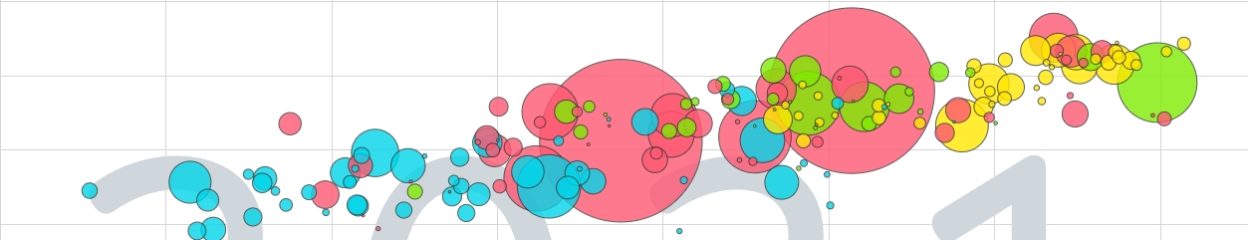

Statistical yearbooks encounter digit(al)ization in very different ways: they disappear into (interactive) databases on the web, survive as PDF editions (more or less well integrated into websites) or celebrate a kind of resurrection in web-based book-like products.

The strengths of yearbooks (especially storytelling ones) are thus more or less lost: for example, a professionally curated, reliable, easy-to-use and explained introduction to the essential data topics, all in one place and guaranteed to remain available for many years to come. At the same time, an ever more extensive and better presented world of data has emerged on the Internet: an accessible wealth of information that one could hardly have dreamed of a few years ago.

But however well developed this data offering may be, it still lacks simplicity and quick access to the right data. Anyone who has ever searched for data on different topics and over different periods knows how frustrating this can be. Which of the many partly similar files is the right one? How can different topics be combined in databases? How can different time series be linked?

Is it the right data for the question asked? Can I use it without risk? Are there perhaps nuances in method or definition that mean it should not be compared with other data?

But often users never reach the official sources at all, because the most significant change in user behavior is googling. The result may be a single figure or a large number of links to very different sources.

… digital transformation.

Statistical institutions are making great efforts in digital innovation, as shown not least by their sometimes very attractive offerings. Many are working on so-called experimental statistics: coding data faster and better with the help of artificial intelligence, extracting indicators from big data, and much more. All this is meant to make the production of statistical data faster, more efficient and less dependent on human intervention (and human error). In data dissemination, however, such experiments are still lacking, at least to date.

Are all these innovations the often mentioned digital transformation?

At best, they are elements of it.

What digital transformation can users dream of?

Perhaps that statistical information is produced in a rapid, uninterrupted process (like a pipeline) and enriched with semantic information in such a way that a simple search across topics and periods delivers an unambiguous result and points to important contextual information. That human intervention will still be necessary even in a digital transformation (at the latest in presentation, explanation and support) is not a paradox: perhaps the overall package of digital transformation also includes non-digital elements, such as dedicated print products that skilfully lead into the digital world.